Is AI truly fair for everyone?

The filters, translation apps, and voice recognition systems we use daily often erase certain voices or fail to recognize certain faces.

This article explores "AI Inequality," examining how technology creates new forms of discrimination.

—

A New Era of Discrimination by Algorithms

—

"Why doesn't AI recognize my face?"

Dr. Joy Buolamwini from MIT Media Lab discovered that facial recognition software didn't recognize her face until she wore a white mask.

"This technology didn't see me

because Black women like me weren't represented in the data."

- Joy Buolamwini, TED Talk, 2016 -

Joy analyzed commercial facial recognition systems by IBM, Microsoft, and Face++, revealing shocking results:

• Error rate for lighter-skinned men: up to 0.8%

• Error rate for darker-skinned women: up to 34.7%

(Source: Gender Shades Project, MIT Media Lab, 2018)
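
The idea behind an audit like this is to break results down by group instead of reporting one overall number. Below is a minimal Python sketch of that idea. The function name `error_rates_by_group`, the group labels, and the toy records are all hypothetical and are not data from the Gender Shades project.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """Return {group: error rate} for (group, predicted, actual) records."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for group, predicted, actual in records:
        totals[group] += 1
        if predicted != actual:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Hypothetical toy records (illustration only, not real audit data).
records = [
    ("group_a", "male", "male"),
    ("group_a", "male", "male"),
    ("group_a", "female", "female"),
    ("group_a", "male", "male"),
    ("group_b", "male", "female"),
    ("group_b", "female", "female"),
    ("group_b", "male", "female"),
    ("group_b", "female", "female"),
]

rates = error_rates_by_group(records)

# A single overall number hides the gap between the two groups.
overall = sum(p != a for _, p, a in records) / len(records)

print("Overall error rate:", overall)
for group, rate in sorted(rates.items()):
    print(group, "error rate:", rate)
```

In this toy data the overall error rate looks moderate, yet every error falls on one group. That is the kind of gap a single headline accuracy figure can hide.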

—

Whose Data Does AI Learn From?

—

AI learns and makes decisions based on data.

But what if this data mostly represents one group, like white males?

AI then views this group as the "standard," labeling everyone else as an "exception."

This is called Algorithmic Bias.
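
To see how a skewed dataset can turn one group into the "standard," here is a deliberately simplified Python sketch. It assumes a toy model that learns a single cut-off value from training data in which 90% of the samples come from one group. The groups, the feature values, and the helper names (`make_samples`, `fit_threshold`, `error_rate`) are hypothetical and do not describe any real system.

```python
def make_samples(group, boundary, count):
    """Build `count` samples whose true label is 1 when feature >= boundary."""
    return [(group, i % 10, int(i % 10 >= boundary)) for i in range(count)]


def training_errors(samples, threshold):
    """Count samples that the rule `feature >= threshold` gets wrong."""
    return sum(int(feature >= threshold) != label for _, feature, label in samples)


def fit_threshold(samples):
    """Pick the single threshold with the fewest errors on all samples."""
    return min(range(11), key=lambda t: training_errors(samples, t))


def error_rate(samples, threshold, group):
    """Error rate of the learned rule for one group only."""
    members = [s for s in samples if s[0] == group]
    return training_errors(members, threshold) / len(members)


# Assumption: the two groups follow different true patterns, but the
# training set is 90% "majority" and only 10% "minority".
train = make_samples("majority", 6, 90) + make_samples("minority", 3, 10)

threshold = fit_threshold(train)
majority_error = error_rate(train, threshold, "majority")
minority_error = error_rate(train, threshold, "minority")

print("Learned threshold:", threshold)
print("Majority error rate:", majority_error)
print("Minority error rate:", minority_error)
```

Because the majority group dominates the training set, the rule that minimizes total errors fits that group's pattern exactly, and the smaller group absorbs all of the mistakes.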

—

Examples of AI Discrimination

—

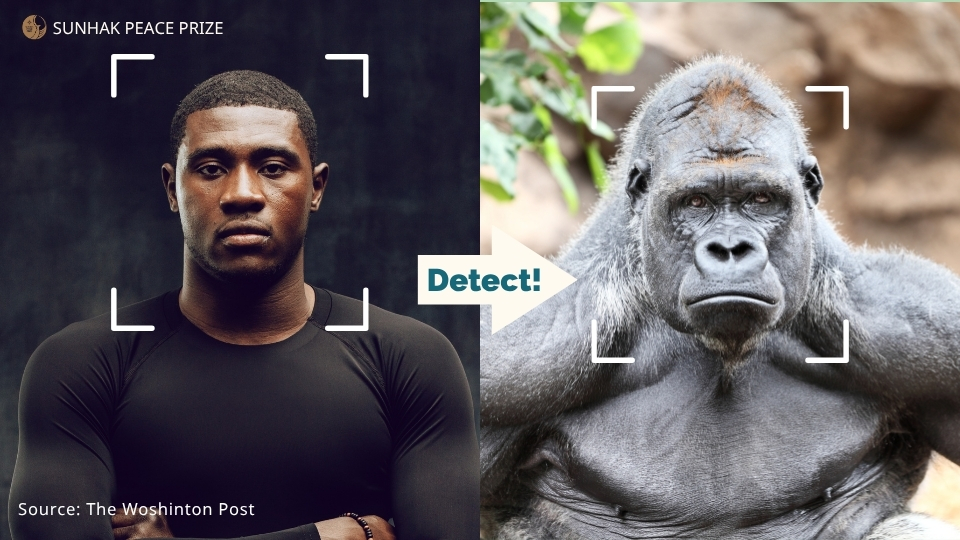

• Facial Recognition Errors

Systems from IBM, Microsoft, and Amazon have shown significantly lower accuracy for Black and Asian women.

• Bias in Hiring Algorithms

Amazon scrapped an AI hiring tool that had been trained mostly on men's resumes and discriminated against women.

(Source: Reuters, Oct 10, 2018)

• Google Photos' Racist Tagging

Google Photos labeled Black people as "gorillas," sparking controversy and leading Google to remove the label.

(Source: The Guardian, Jan 12, 2018)

These biases arise because AI learns society's prejudices from its training data and then lends them legitimacy through its outputs.

—

AI's Biased Standards

—

AI technology deeply influences our daily lives through filters, translators, and voice recognition systems.

However, these technologies often unconsciously set biased "standards," excluding those who differ.

① TikTok Filters: Whose Face is the Standard?

Southeast Asian users often say:

"TikTok filters lighten my skin, enlarge my eyes, and raise my nose bridge. It doesn't feel like my face anymore."

This isn't just cosmetic. It reveals unconscious racial biases, reinforcing a white-centric beauty standard.

"These filters increasingly impose narrow standards of how we should look, often white, slim, and pale."

- MIT Technology Review, Apr 2, 2021 -

(Source: TikTok @jmaham88 / TikTok Filter: Before & After)

② AI Translators’ Language Bias

Translation tools like Google Translate and DeepL work well with high-resource languages (English, French, German) but often mistranslate or distort lower-resource languages, including African languages (Swahili, Yoruba) and some Asian languages (Tagalog).

"Large language models perform well for English speakers but poorly for Vietnamese speakers, and even worse for Nahuatl speakers."

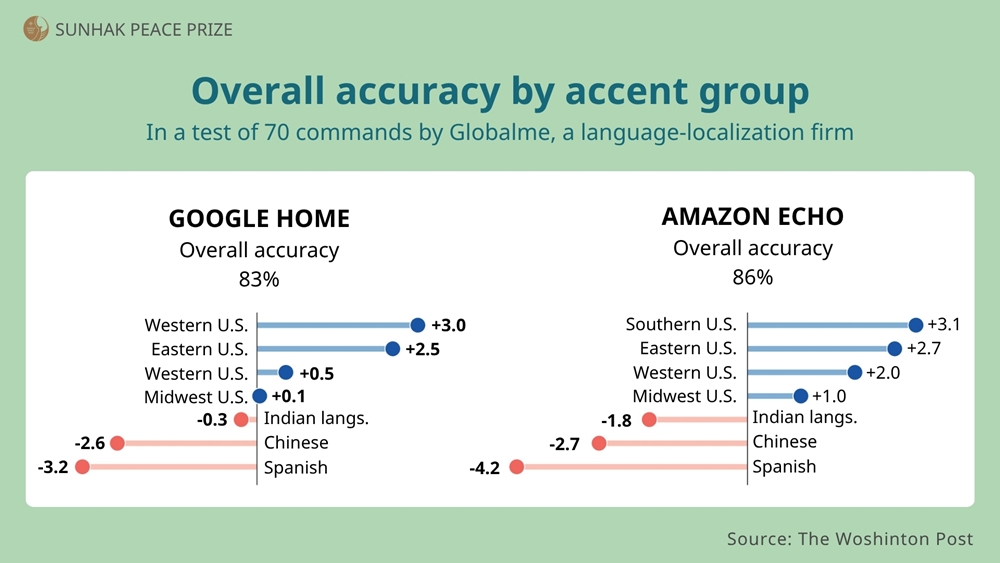

③ Accent Bias in Voice Recognition

According to Globalme, Google Home and Amazon Echo perform well with American and British accents but struggle significantly with Spanish, Indian, and Chinese accents.

• Southern US accents: +3.1% accuracy

• Spanish accents: -4.2% accuracy

AI voice recognition performs differently based on accents.

While North American accents are recognized relatively accurately, Spanish, Indian, and Chinese accents experience significantly lower recognition rates.

(Source: Washington Post, Dec 21, 2018 / Globalme)

"Voice assistants often fail to understand non-standard English accents.

This isn't merely a technical problem but one of inclusion and exclusion."

- Washington Post, Dec 21, 2018 -

—

How AI Inequality Happens

—

Global tech companies collect data from developing countries without involving local people in designing or benefiting from the technologies.

This phenomenon is called Data Colonialism.

| Factor | Global North (Developed nations/companies) | Global South (Developing nations/users) |
| --- | --- | --- |
| Data Collection | ✓ Active Collection | ✓ Passive Provision |
| Design Involvement | ✓ Tech-driven | ✕ Little to None |
| Profit Sharing | ✓ Corporate Dominance | ✕ Little Benefit |
| Tech Standards | White, English-centric | Exclusion of Local Languages & Cultures |

The World Bank warns:

"Many countries and citizens have little real control

over how their data is collected and used."

- World Bank, 2021 -


—

What is the Global Community Doing?

—

In 2021, UNESCO unanimously adopted the "Recommendation on the Ethics of Artificial Intelligence," outlining core principles:

• Fairness & Non-discrimination: AI must fairly represent everyone, regardless of race, gender, language, accent, or region.

• Privacy & Data Protection: Users must maintain control over their personal data.

• Sustainability & Inclusiveness: AI should benefit those on economic and cultural margins.

• Explainability: AI results must be transparent and understandable to users.

—

Gen Z and AI: Users and Victims

—

Gen Z grew up viewing AI as normal rather than novel.

But what if:

• Your face keeps being distorted?

• Your language is constantly mistranslated?

• Your favorite content gets labeled "non-mainstream"?

This isn't a matter of preference but one of identity exclusion.

—

What We Can Do

—

Addressing AI inequality starts with critical awareness:

▣ Critically evaluate technology

• Examine how algorithms limit your choices

• Check bias in filters and translations

▣ Demand AI ethics education

• Advocate for "AI Ethics" and "Digital Citizenship" courses in schools

▣ Support inclusive technology

• Choose technologies respectful of diverse languages and accents

• Support businesses and startups creating inclusive tech

▣ Join international campaigns

• Share UNESCO’s AI ethics recommendations

• Raise awareness through hashtags like #FilterOutBias

—

Making Technology Inclusive

—

AI isn't neutral.

It reflects the biases and values of its creators.

We must ask ourselves:

"Who am I within AI?"

This question begins our journey toward equitable technology.

"Technology is not neutral.

It reflects the values we choose."

- Shoshana Zuboff, Harvard Business School Professor -


Written by Yeonjae Choi

Director of Planning

Sunhak Peace Prize Secretariat

▣ References and Sources

• MIT Media Lab (2018), Gender Shades Project

• Reuters (2018.10.10), Amazon scrapped AI recruiting tool that showed bias against women

• The Guardian (2018.01.12), Google Photos once labelled black people ‘gorillas’. Now image labelling is back

• MIT Technology Review (2021.04.02), How beauty filters are changing the way young girls see themselves

• Stanford University (2025.05), How AI is leaving non-English speakers behind

• The Washington Post (2018.12.21), Alexa has a problem with accents

• World Bank (2021), World Development Report: Data for Better Lives

• UNESCO (2021), Recommendation on the Ethics of Artificial Intelligence